Industrial control system cybersecurity today focuses largely on securing networks, while efforts mostly ignore the process control equipment that is crucial for plant safety and reliability, leaving it woefully vulnerable, an expert warns.

In a recently published series of three white papers collaboratively produced by industrial control system (ICS) cybersecurity firm Dragos and General Electric (GE), the companies make the case that increased connectivity could make both engineering and business sense. "As industrial processes evolve, sensors provide opportunities for increased visibility into process; data integration and analysis improve our understanding of process; machines become more capable of control; [and] data connectivity becomes increasingly possible." However, "traditional enterprise network security may be in conflict with emerging business needs for industrial control processes," the companies note.

Information technology (IT) security, for example, recommends isolating systems and information, enforcing least-privilege user access, and using encryption. As the papers put it, "In the IT security space, tight relationships have been developed between the businesses and IT security, so that security is carefully built around business requirements. In industrial systems, we have noted some conflicts that resulted in either sub-optimal security and vulnerable processes or good security at the costs of suboptimal process or unnecessary security expense."

According to the companies, the upshot is this: "We need better collaboration between process designers and engineers, and the security teams that help protect industrial control networks." Engineers and designers should work with their security teams to build non-intrusive security into the ICS, "which will produce more value than relying simply on later bolt-on solutions." That will require engineers to understand these connectivity needs and cybersecurity threats, but it will also require two-way communication and collaboration between engineers and security professionals, who should tailor security around engineering and business needs. This type of collaboration, they note, will be especially important as IT merges into industrial operations environments in the Industrial Internet of Things (IIoT) era.

For Joseph Weiss, a registered professional engineer and fellow of the standard-setting nonprofit International Society of Automation (ISA) who currently serves as managing director of ISA Control System Cybersecurity, the conversation between engineers and process architects is important in light of the much-discussed convergence of IT and operational technology (OT). Weiss, an energy industry veteran, has helped shape several power sector security primers and implementation guidelines over decades, first as an expert at the Electric Power Research Institute and, more recently, as a member of working groups that develop cybersecurity standards at the International Electrotechnical Commission (IEC) and ISA. In an interview with POWER in January, Weiss said, "I believe the biggest overall problem control system/operations has with the cybersecurity community is that community's focus on 'protecting the network,' rather than 'protecting the operational systems/process.'"

The Skewed Evolution of ICS Security Responsibilities

The industry's distorted priorities are rooted in a complex history, Weiss explained. For much of its century-old existence, the grid was monitored and controlled without internet protocol–based networks. When networks were eventually adopted, they served a support function, and for the most part, control system cybersecurity continued to be centered on protecting the control systems and the processes they monitor and control. According to Weiss, the turning point arrived after September 11, 2001, when, owing to a heightened emphasis on security, companies began shifting cybersecurity from operational organizations to IT. Today, cybersecurity falls under OT organizations. "Before then, the physical equipment such as turbines, pumps, motors, protective relays, and their associated processes were the sole responsibility of the relevant technical organizations," said Weiss. "When cybersecurity was transferred to IT (and now OT), the responsibility for cybersecurity of the control system field devices (that is, process sensors, actuators, drives, and the like) and the engineering equipment fell off the table."

Weiss asserts that the fallout from this development ultimately promoted IT approaches over engineering approaches. The issue has endured. "This can be seen from approaches such as the Common Vulnerability Scoring System (CVSS) for rating vulnerabilities, where the scores are assigned to flaws affecting ICS. The same can be seen by the Department of Homeland Security's Industrial Control Systems Cyber Emergency Response Team assigning criticality to vulnerabilities," he said. "However, neither approach addresses the impact of the vulnerability on the actual plant equipment and process. That is, what is the impact of a cyber vulnerability on a specific pump, valve, motor, relay, and others?"

According to Weiss, the confusion is also entrenched in the term "operational technology," which he says "has become a rather nebulous expression that's been applied to all non-IT assets." Today, OT solutions generally focus on control system networks rather than control system equipment. "Consequently, it's not clear that engineers/process architects would consider themselves OT," Weiss said. That is dangerous in part because it fosters a lack of control system cybersecurity awareness among the engineers and technicians who are responsible for the control systems. "Control systems are complex systems with complex systems interactions. Generally, detailed systems interaction studies are performed including Failure Modes and Effects Analyses (FMEA), Hazard and Operability analyses (HazOps), et cetera. What is often missing from these analyses are cyber considerations," he noted.

A Dangerous Gap in Cybersecurity

Weiss warned, starkly, that the historical shift in cybersecurity focus has created a fundamental gap that leaves critical components vulnerable. "Cybersecurity is defined by the National Institute of Standards and Technology (NIST) as electronic communications between systems that affect confidentiality, integrity, or availability," he explained. Meanwhile, control systems at today's power plants combine commercial off-the-shelf human-machine interfaces (HMIs), generally Windows-based, and internet protocol (IP) networks, generally ethernet, with field devices such as process sensors, actuators, and drives and their field-level networks. Owing to the focus on networks, cybersecurity has evolved into a "top-down approach by identifying malware and network anomalies in the IP networks," a process commonly known as "network anomaly detection." According to Weiss, "this is because for IT, the end goal is to assure that data has not been compromised. The IT approach has been expanded to also address control systems by monitoring OT control system ethernet networks." However, while he conceded that network monitoring is "necessary," he said it is "not sufficient" to secure control systems and prevent long-term equipment damage.

"That is because network monitoring can neither cyber secure legacy control system devices that have no cybersecurity or authentication nor identify which specific control system devices (such as pumps, valves, motors, and relays) are vulnerable to network attacks. Consequently, the IT/OT approach cannot support reliability or safety considerations nor cyber secure the system of systems that make up control systems." That, he said, is "an intractable problem."

A Basic Problem

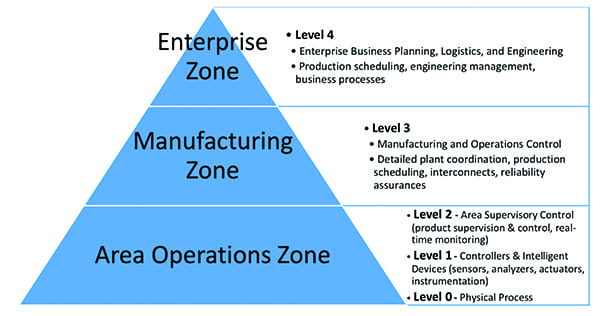

A useful way to understand the issue is using the Purdue Model for Control Hierarchy, which is a logical framework that essentially segments an enterprise into zones (see sidebar, "The Purdue Model Is Evolving"). "In far too many instances, the cybersecurity focus has been exclusively at the Levels 2–4 because these levels generally use commercial off-the-shelf (COTS) technology and ethernet communications," Weiss said. "This is the technology that most IT organizations (end-users and vendors) are familiar with and have available training and cybersecurity tools." However, the cyber impacts at Levels 2–4 are "generally short-term denial-of-service events unless they are used to compromise the Level 1 devices or the Level 0 process. Conversely, Level 1 devices are well-known to the operations and maintenance staff but generally not to IT and security staffs or governmental policymakers," he said.


The Purdue Model Is Evolving

In the 1990s, T.J. Williams, a researcher at Purdue University's Laboratory for Applied Industrial Control, suggested a unique method for defining the place of the human in a computer-integrated plant or enterprise using an expanded interpretation of the Purdue Enterprise Reference Architecture to essentially incorporate the connection of information technologies with manufacturing processes.

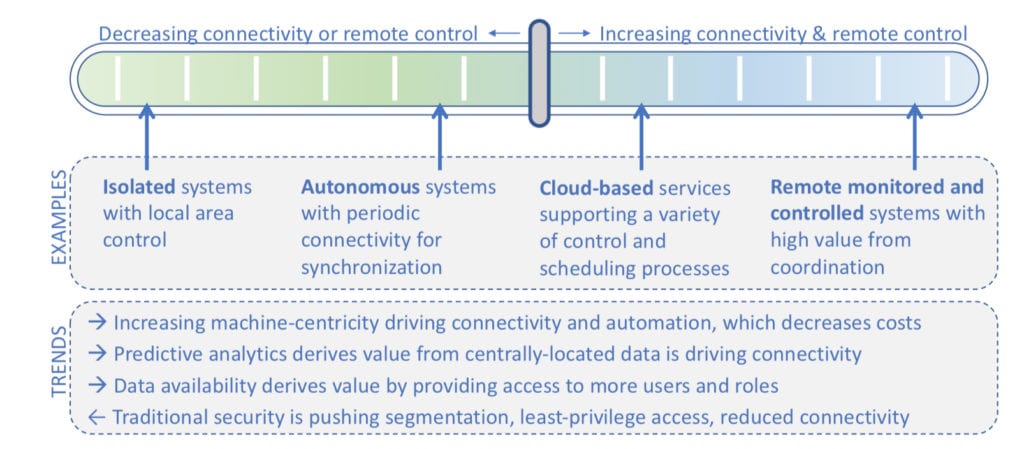

According to a white paper authored jointly by industrial control system (ICS) cybersecurity firm Dragos and General Electric (GE), the Purdue Model (Figure 1) "was intended to be flexible." Its codification in standards such as ANSI/ISA-95 (known internationally as IEC 62264), which was developed to automate the interface between enterprise and control systems, has "given the impression that it is rigid and well-defined." The model also provides foundational language for control system security standards such as IEC 62443 and NIST SP 800-82, the companies noted. "The basic idea (and the way that it was originally proposed) is easiest to digest if we think of an enterprise as a centrally run and top-down hierarchy that is decomposed into five levels," they wrote, even though many industrial processes are increasingly non-hierarchical, especially as the industry evolves toward the industrial internet of things (IIoT). The five levels and their functions are summarized as follows.

Level 0–Physical Process. This level defines the actual physical processes.

Level 1–Intelligent Devices. This level includes process control equipment that senses and manipulates the physical processes. Devices in this level include process sensors, analyzers, actuators, and related instrumentation.

Level 2–Control Systems. This level encapsulates the control systems and provides supervisory control and monitoring of the controllers. It includes supervisory control and data acquisition (SCADA) software, human-machine interface (HMI) devices, alarm/alert systems, and control room workstations, all of which may communicate with systems in Level 1.

Level 3–Manufacturing and Operating Systems. Devices in this level manage plant operations and include applications, services, and systems such as the data historian, reliability assurance, production scheduling and reporting, engineering workstations, and remote access services. These systems communicate with systems in Level 4 through a "demilitarized zone," a subnetwork placed between the industrial network and the enterprise network to add a layer of security to the trusted network.

Level 4–Business and Logistics Systems. Used at the enterprise level, this level covers business and logistics systems and is sometimes referred to as the IT side of the organization. It comprises reporting, scheduling, inventory management, capacity planning, operational and maintenance management, email, and phone services.

In their white paper, Dragos and GE note that the reason "many find it difficult to apply the Purdue Model is that the original application of the Purdue Model was created when most production systems were isolated and weakly connected outside of the enterprise." Because modern industrial systems "are increasingly autonomous (machine-centric), connected (data moves, is integrated, and analyzed), and accessed by more diverse roles for tuning and optimization," the companies suggest that a "sliding-scale" model of connectivity may be more appropriate than a hierarchy-based model (Figure 2). "As our evolving Industry 4.0 ICS take more advantage of IIoT technologies, we will be dealing with requirements for connectivity, access, remote control, and data sharing. It is important that these requirements drive new security and are deployed in collaboration with security engineers, rather than have security forbid the requirements or deploying without security because of the inability for engineers to collaborate to lead security solutions," the companies said.

Figure 2. The "sliding scale" of connectivity incorporates the entire Purdue Model spectrum, including processes with low connectivity (on the left side of the scale). But it also incorporates emerging information and technology opportunities that could enable cost savings or additional revenue from data exploitation.

Source: "Design and Build Productive and Secure Industrial Systems," Kenneth Crowther (General Electric), Robert M. Lee (Dragos), December 2018
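To make the level structure easier to reason about, the short Python sketch below encodes the five Purdue levels from the sidebar, the Level 3/Level 4 demilitarized-zone boundary, and the Levels 2–4 band where, per Weiss, cybersecurity attention has concentrated. It is a hypothetical illustration only: the names `PurdueLevel`, `crosses_dmz`, and `outside_typical_focus` are invented for this example and are not drawn from ISA-95, IEC 62443, or the Dragos/GE papers.

```python
from enum import IntEnum


class PurdueLevel(IntEnum):
    """The five Purdue Model levels summarized in the sidebar (hypothetical encoding)."""
    PHYSICAL_PROCESS = 0
    INTELLIGENT_DEVICES = 1
    CONTROL_SYSTEMS = 2
    MANUFACTURING_OPERATIONS = 3
    BUSINESS_LOGISTICS = 4


# Representative contents of each level, taken from the sidebar text.
EXAMPLES = {
    PurdueLevel.PHYSICAL_PROCESS: ["physical process"],
    PurdueLevel.INTELLIGENT_DEVICES: ["process sensors", "analyzers", "actuators"],
    PurdueLevel.CONTROL_SYSTEMS: ["SCADA software", "HMI devices", "alarm/alert systems"],
    PurdueLevel.MANUFACTURING_OPERATIONS: ["data historian", "engineering workstations", "remote access services"],
    PurdueLevel.BUSINESS_LOGISTICS: ["inventory management", "capacity planning", "email"],
}

# Levels 2-4: where, per Weiss, cybersecurity focus has concentrated (COTS hardware, ethernet).
TYPICAL_FOCUS = {
    PurdueLevel.CONTROL_SYSTEMS,
    PurdueLevel.MANUFACTURING_OPERATIONS,
    PurdueLevel.BUSINESS_LOGISTICS,
}


def crosses_dmz(src: PurdueLevel, dst: PurdueLevel) -> bool:
    """True if a flow between two levels crosses the Level 3/Level 4 boundary,
    which is where the sidebar places the demilitarized zone."""
    low, high = min(src, dst), max(src, dst)
    return low <= PurdueLevel.MANUFACTURING_OPERATIONS < high


def outside_typical_focus() -> list:
    """Levels left uncovered by a security focus limited to Levels 2-4."""
    return [level for level in PurdueLevel if level not in TYPICAL_FOCUS]


if __name__ == "__main__":
    for level in outside_typical_focus():
        print(level.value, level.name, EXAMPLES[level])
    print(crosses_dmz(PurdueLevel.CONTROL_SYSTEMS, PurdueLevel.BUSINESS_LOGISTICS))
```

In this encoding, `outside_typical_focus()` returns Levels 0 and 1, the physical process and the field devices that Weiss says "fell off the table." Because real devices increasingly blur these boundaries, assigning each asset a single integer level is a simplification.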

The issue is compounded by today's blending of modern IT technologies into ICSs. "[I]t is getting harder to separate Levels 1, 2, and sometimes even 3," Weiss noted. "Incorporating a web server directly into a controller or actuator begs the question–is it Level 1, 2, 3, or some new combination?" For that reason, Weiss argues, it is essential to understand what Level 1 devices are and what they do, and then to recognize why the cybersecurity of Level 1 devices is an issue.

For example, some Level 1 devices use embedded systems with basic security (password protection) or have no cybersecurity at all; many are now IP-based or have wireless capabilities; many traverse multiple layers and write data back to systems in Levels 2, 3, or 4; and some rely on physical security, such as failsafe jumpers (write-protected), and are located in hazardous zones. In the latter case, Weiss suggested, workers may be reluctant to enter the hazardous areas during incidents, so the devices may be connected using insecure methods. Furthermore, field communication protocols such as wired and wireless HART, Profibus, Foundation Fieldbus, and others have been demonstrated to be cyber vulnerable.

The Safety and Security Nexus

The crux of the issue–and one not easily or widely recognized–is that the compromise of Level 1 devices, whether intentionally or unintentionally, "can impact the physics of the process thereby causing physical damage and/or personal injury with attendant long-term consequences." For Weiss, this points to a common misconception that safety and security are synonymous. "They are not. You can be cyber secure without being safe. This is because safety is really dependent on the Level 0 and 1 devices, and instrumentation networks, not the higher-level IP ethernet networks," he said. "The real safety and reliability impacts come from manipulating physics, not data."

Weiss cited a long list of major disasters in which issues with Level 1 field instrumentation played a significant role, including the Taum Sauk reservoir/dam failure, the Texas City refinery explosion, and the Three Mile Island core melt. Among more modern, cybersecurity-related examples is the Aurora vulnerability, which, Weiss noted, uses cyber (electronic communications) to reclose breakers out of phase with the grid, causing physical damage to any alternating current rotating equipment and transformers connected to the affected substation. "There is no malware involved and therefore would likely not be detected by network monitoring," he noted. The Stuxnet payload also involved modified control system logic, not malware, he said. "Malware can cause impacts such as the Ukrainian cyberattacks–hours to day-long outages or downtime. Manipulating physics causes physical damage leading to months of downtime," he warned.

Murky Solutions

What's worse, according to Weiss, is that "there is minimal cyber forensics or training to detect these issues which can easily be mistaken for unintentional events or 'glitches.'"

One issue he highlights concerns process sensors, which are Level 1 devices that function as the input to all controllers and HMIs to safely and reliably monitor and control a process. "An example is the temperature sensors that monitor turbine/generator safety systems to prevent the generators from operating in unstable or unsafe conditions. These process sensors are integral to the operation of the system and cannot be bypassed. If the temperature sensor is inoperable for any reason, it can prevent the turbine from restarting, whether in automatic or manual. The lack of generator availability can prevent grid restart from occurring."
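The dependence Weiss describes can be pictured as a simple fail-safe restart permissive. The Python sketch below is hypothetical: the `TemperatureReading` type, its health flag, and the 120°C limit are invented for illustration and do not represent any particular turbine/generator protection scheme. It shows two things: an inoperable sensor blocks restart in either automatic or manual mode, and the logic simply trusts whatever value the sensor reports.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical restart limit, for illustration only; real limits are unit-specific.
MAX_RESTART_TEMP_C = 120.0


@dataclass
class TemperatureReading:
    value_c: Optional[float]  # None models a sensor that returns no value
    healthy: bool             # hypothetical sensor health/quality flag


def restart_permitted(reading: TemperatureReading, mode: str = "auto") -> bool:
    """Fail-safe permissive: allow a restart only with a healthy, in-range reading.

    The mode ("auto" or "manual") is validated but does not change the outcome,
    because the sensor cannot be bypassed in either mode.
    """
    if mode not in ("auto", "manual"):
        raise ValueError(f"unknown mode: {mode}")
    if not reading.healthy or reading.value_c is None:
        return False  # inoperable sensor: restart blocked
    return reading.value_c <= MAX_RESTART_TEMP_C


if __name__ == "__main__":
    print(restart_permitted(TemperatureReading(85.0, True)))
    print(restart_permitted(TemperatureReading(None, False), mode="manual"))
```

Nothing in this check authenticates the reading: a spoofed but plausible value would pass just as a genuine one would, which is the gap Weiss points to in legacy process sensors.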

But while network anomaly detection systems generally assume that legacy process sensors provide secure, authenticated input, no cybersecurity, authentication, or adequate real-time forensics is generally available for those sensors, he said. That omission is also rooted in a mismatch between technology evolution and network capability that has led security professionals to inspect packets rather than electrical signals, he explained. In the late 1990s and 2000s, as more systems adopted Windows, the operating system was too slow to keep up with the high-frequency, millisecond-level noise that engineers generally depend on for root cause analyses and to understand and monitor vibration, anomalies, and other criteria, he said. "So, the serial-to-ethernet converter vendors decided to filter out all that noise because Windows could not use it anyway."
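Why that filtering matters can be shown with a toy calculation. The Python sketch below assumes a hypothetical sensor whose output is a steady value plus a small, fast oscillation (standing in for the high-frequency content engineers use for vibration and root cause analysis), and it uses block averaging as a simple stand-in for converter-side filtering; it does not model any specific vendor's converter. Two raw signals with very different oscillation amplitudes yield essentially identical reported values once filtered, so a tool that inspects only the reported data cannot tell them apart.

```python
import math


def raw_signal(n, baseline=50.0, amplitude=0.5, period=4):
    """Hypothetical raw sensor samples: a steady baseline plus a fast oscillation."""
    return [baseline + amplitude * math.sin(2 * math.pi * i / period) for i in range(n)]


def block_average(samples, block):
    """Report one averaged value per block of raw samples (a stand-in for filtering)."""
    return [
        sum(samples[i:i + block]) / block
        for i in range(0, len(samples) - block + 1, block)
    ]


def peak_to_peak(values):
    """Spread between the largest and smallest value."""
    return max(values) - min(values)


normal_raw = raw_signal(400, amplitude=0.5)
altered_raw = raw_signal(400, amplitude=2.0)  # same baseline, much stronger oscillation

# Each block of 8 samples spans exactly two oscillation periods, so the
# oscillation averages out and only the baseline survives.
normal_reported = block_average(normal_raw, 8)
altered_reported = block_average(altered_raw, 8)

if __name__ == "__main__":
    print("raw peak-to-peak:", peak_to_peak(normal_raw), peak_to_peak(altered_raw))
    print("reported peak-to-peak:", peak_to_peak(normal_reported), peak_to_peak(altered_reported))
```

Real waveforms and filters are far more complex; the sketch only illustrates that information removed before the data is packetized cannot be recovered by inspecting the packets.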

Few solutions exist to remedy this issue. Only one company to date, Israel-based OT solution firm SIGA, is addressing the validity and authentication of Level 1 legacy devices by examining electrical signal characteristics in real time, Weiss noted. "Many other ICS firms are looking at sensor security at the packet level. Only SIGA is looking at it at the raw signal level," he added.

For now, it appears that a larger public-private effort will be needed to remedy the problem. Weiss said he has repeatedly warned, in multiple blog posts and interviews as well as in comments to policymakers and standard-setting working groups, that existing cybersecurity and safety standards do not adequately address the security and authentication vulnerabilities of legacy field devices and their networks. Because there is no means to correlate network anomalies with physical processes or individual pieces of equipment, it is clear that "a network-based approach cannot provide this critical information," he said.

Finally, solutions will require collaboration among engineers, designers, and security professionals. "Unless cyber threats can explicitly compromise safety and reliability, I do not believe operations will really care about security. That is not to say that IT won't be interested as there is substantial high-value data that can be compromised," Weiss said. ■

–Sonal Patel is a POWER associate editor.